The web is a tool for sharing knowledge, connecting people and organizations, and bringing to light events that some would prefer to keep hidden. Communication has become instantaneous on a global scale, and citizens have gained an additional means of participation. We can freely access immeasurably more information than in the past: an immense mass of data, like an encyclopaedia with no visible limits, and a real-time universe of information from distant sources that is available to everyone. This is a gigantic step forward for human knowledge. Each of us uses it daily, and we could no longer do without it.

However, alongside this potential, the network hides a dark side: false information, racist language, doctored photos, manipulation designed to pollute electoral campaigns, hatred, fake accounts and conspiracy theories. These distorted uses sow division, prejudice and preconceived hostility. Technological progress, which is taking a huge leap forward with artificial intelligence, brings risks as well as benefits.

The debate on so-called fake news, which has preoccupied Western societies for some years now, is about to take a further leap: a new kind of distortion of reality is taking hold, one that will be much easier to fall for and much harder to disprove through classic journalistic investigation and careful checking of sources. These are the so-called deepfakes, i.e. videos manipulated through artificial intelligence. Videos of this type, which are now starting to spread in Europe as well, derive from two recent technological developments, big data and artificial intelligence, combined with a media ecosystem in which it is no longer possible to distinguish what is true from what is false, in a society hostage to simulacra and fictions.

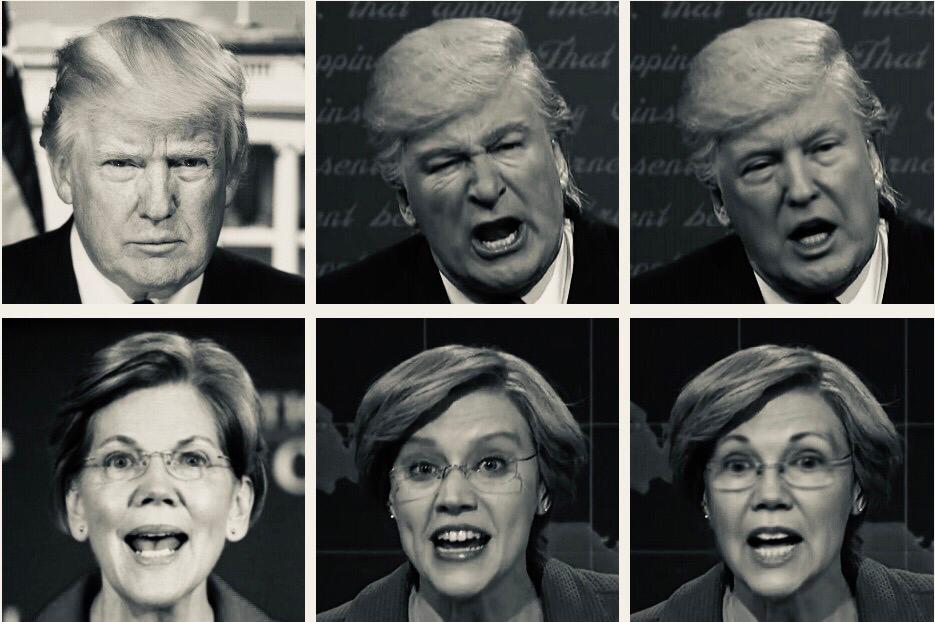

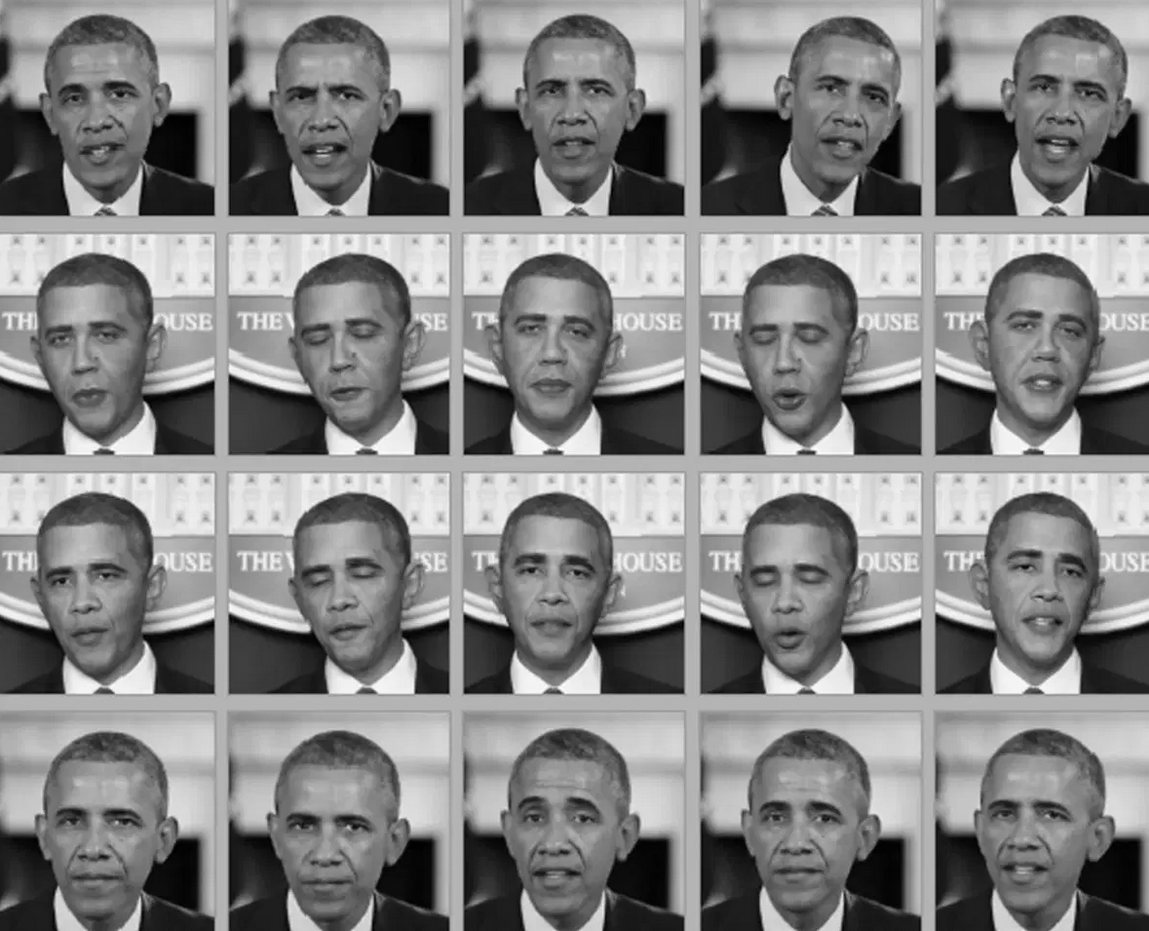

A deepfake is a fake video generated by artificial intelligence (AI) from progressively modified real content. It is therefore not a simple face swap of the kind Snapchat filters offer: in a deepfake, the AI learns how a face in a video is structured and makes it move independently, creating facial expressions in real time and in sync with audio (or video) different from the original content. Deepfakes use deep learning algorithms and neural networks (that is, artificial intelligence that processes data in layers, learns from the process itself and gradually improves the final result), combined with facial mapping software, to create videos that alter a person's identity. All of this comes at a very low cost in terms of processing time, equipment and skills. In practice, within certain limits, one does not have to be an expert to produce a credible fake: the software takes care of it all.
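To make the idea of a network that processes in layers and improves by learning from its own errors more concrete, here is a deliberately tiny Python sketch. It is not how deepfake software is built: the two-weight "network", the starting values, the learning rate and the function name `train_two_layer` are all illustrative assumptions. It only shows the basic loop of predicting, measuring the error and adjusting each layer slightly.

```python
from typing import List, Tuple


def train_two_layer(
    x: float, target: float, steps: int = 50, lr: float = 0.1
) -> Tuple[float, float, List[float]]:
    """Train a toy two-layer model, output = w2 * (w1 * x), by gradient descent.

    Returns the two learned weights and the squared error recorded at each step.
    """
    w1, w2 = 0.5, 0.5  # arbitrary starting weights (assumption for illustration)
    losses: List[float] = []
    for _ in range(steps):
        hidden = w1 * x          # first layer
        output = w2 * hidden     # second layer
        error = output - target
        losses.append(error ** 2)
        # Gradients of the squared error with respect to each weight
        grad_w1 = 2 * error * w2 * x
        grad_w2 = 2 * error * hidden
        # Nudge both layers in the direction that reduces the error
        w1 -= lr * grad_w1
        w2 -= lr * grad_w2
    return w1, w2, losses


w1, w2, losses = train_two_layer(x=1.0, target=2.0)
print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.2e}")
```

Real systems apply the same principle to millions of weights and to images rather than to a single number.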

Initially, deepfake technology was developed through academic research and applied in high-budget film production as an advanced form of special effect for recreating deceased actors. This was the case with Paul Walker's character in Furious 7, who was recreated partly digitally and partly with the physical presence of the actor's two brothers. The turning point came in 2017, when a community called deepfake formed on Reddit, in which members shared fake videos they had created. One of the first harmless, viral uses was a series of deepfakes in which the actors' faces were replaced with that of Nicolas Cage. But the term deepfake started to spread mainly in the autumn of 2017, when a Reddit user adopted the pseudonym "Deepfakes" to publish fake porn videos of famous people on the site. In these videos the face of a porn actress or actor was replaced with that of a Hollywood actor through a technique called "generative adversarial networks", or GANs. From that moment the name "deepfakes" soon came to describe all videos made in this way: "deep" refers to deep learning, one of the learning methods of artificial intelligence, while "fakes" simply indicates that these videos are fake by nature. A dedicated channel on Reddit, where anyone could publish their "creations", was also devoted to deepfake videos, but in February 2018 Reddit decided to shut it down following the many protests it received.

Although deepfakes entered the public debate at the end of 2017 because of these porn videos, videos manipulated through artificial intelligence had already been discussed with concern for several months, mainly because of their possible implications for news and information. In July 2017 an article in The Economist described the phenomenon when it was still little known, explaining how simple computer code could modify the audio or images of a video to make people appear to say or do things that never happened. What in July of that year seemed to be a technology available only to a few computer experts became, within a few months, accessible to a wider audience thanks to the spread of very user-friendly software. 1

The risk of encountering a fake video and believing it to be true has alarmed newspaper editors, who are now working to counter this new type of fake news. The Wall Street Journal has set up its own task force to examine the deepfakes in circulation and train its editorial staff to recognize them. Those who create these videos use various techniques, ranging from simply replacing one face with another to making a person appear to say or do things that never happened, for example by transferring facial and body movements from subject A to subject B. A concrete demonstration of how dangerous manipulated video can be came from a clip, altered almost imperceptibly, that the White House press secretary posted on Twitter. It showed the clash between US President Donald Trump and CNN journalist Jim Acosta during a press conference on November 7, 2018, and appeared to show Acosta laying his hands on the woman tasked with taking away his microphone.

The video was analyzed by Storyful, an online news verification organization, which uncovered the manipulation. A few frames had been added to the original video at the point where Acosta placed his hand on the White House employee's arm, with the aim of emphasizing the gesture and making it appear violent. While journalism should counter the spread of deepfake videos and expose their manipulations when they carry false news, there are also those in the news business who are using the technology to experiment with new forms of communication, creating a short circuit that complicates the matter further. In China, the state news agency Xinhua has produced the world's first entirely artificial news presenter. It is a digital version of a real presenter, Qiu Hao, whose facial movements, voice and gestures have been carefully replicated: his double can speak either Mandarin or English and read any news item, without the risk of making mistakes but also without having to think about what he says, with all the ethical repercussions that this entails.
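The kind of tampering Storyful identified in the Acosta clip, extra frames inserted into a video, can sometimes be spotted by looking for consecutive frames that are almost identical. The Python sketch below illustrates that idea under strong assumptions: frames are represented as flat lists of grayscale pixel values, inserted frames are taken to be near-copies of their neighbours, and the names `mean_abs_diff` and `find_repeated_frames`, as well as the threshold, are hypothetical. It is not Storyful's method, which is not described here.

```python
from typing import List, Sequence

# Hypothetical representation: one frame = flattened grayscale pixels (0-255)
Frame = Sequence[int]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference between two equally sized frames."""
    if not a or len(a) != len(b):
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def find_repeated_frames(frames: List[Frame], threshold: float = 1.0) -> List[int]:
    """Return indices of frames that are nearly identical to the frame before them."""
    return [
        i
        for i in range(1, len(frames))
        if mean_abs_diff(frames[i - 1], frames[i]) < threshold
    ]


# Toy clip of four-pixel frames; the frame at index 2 repeats the one at index 1.
clip = [
    [10, 20, 30, 40],
    [15, 25, 35, 45],
    [15, 25, 35, 45],
    [22, 31, 44, 52],
]
suspects = find_repeated_frames(clip)
print(suspects)
```

A real check would decode frames from the video file and would have to tolerate compression noise and genuinely static shots, so a flagged frame is only a prompt for closer inspection.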

In the USA, where the echo of the Cambridge Analytica case has not yet faded, the debate is open: Hany Farid, professor at Dartmouth College and a pioneer of PhotoDNA (a technology used to block child sexual abuse imagery), warns that "we are decades away from having a 'forensic technology' capable of unambiguously separating the true from the false".

The techniques used to generate deepfakes arouse great apprehension because they are poised to reach, in a short time, a level of sophistication that will make it impossible to distinguish truth from lies, together with an ease of use that will make them available to anyone, as if they were simple apps. At the same time, there is a lack of shared and effective solutions for countering deepfakes at the precise moment they are put online and begin to circulate. If science is still powerless, there are those who would like legislative intervention to enforce defamation laws; however, applying them to social platforms or to actors operating outside the relevant jurisdiction does not seem easy. For this reason, one of the ways we can defend ourselves against false information is to select our sources carefully. If the content comes from a source you don't know, it is worth searching online for the outlet's history. Check when it was created and by whom, whether it has a record of circulating stories before they were confirmed, and what kind of information it publishes or shares. Secondly, it is important to see which other outlets are carrying the video in question, and whether the news is picked up in the following days by other accredited sources. The basic rule is, above all, one: put your trust in established sources of information that have credibility. On this controversial issue, the internet giants have been quick to respond and have started to issue guidelines for the publication, or in some cases the removal, of deepfake videos. Twitter will flag content that has been digitally altered and give people the opportunity to find out more. Facebook's proposal is the most drastic: removing the fake content. Meanwhile, Google's research labs have produced and released a deepfake database to make artificial content easier to identify. 2

In the age of post-truth and the manipulation of information through social media, the new possibilities offered by this technology generate apprehension in political circles. One fear is, of course, that "propaganda" experts will use deepfake technology to generate videos that compromise someone or absolve them of misconduct, or that put into the victim's mouth messages he or she would never endorse. Indeed, the political aims of those who make deepfakes soon became established, making the situation darker and more disturbing. As with fake news, spreading false content attributed to public figures is a way to steer public opinion, to confuse it and to steadily increase distrust in institutions and information sources. It is not only the image that is altered: software such as Lyrebird or Adobe Voco can artificially create speech from an analysis of a person's voice. There is also a technical factor to consider: politicians tend to stand at a podium or sit under constant lighting, which makes deepfakes easier to create and the result more credible. In a world where politicians can be made to say anything, there is another side effect to watch for: the "deepfake" label being used as an excuse to dismiss uncomfortable videos that circulate on unofficial channels.

It is important to reflect on the threats that the deepfake phenomenon poses to data security and privacy, to the law as a whole and to the way in which politics uses technology and the web. It is a threat to the relationship of trust between citizens and institutions, and therefore to democracy and to national and international security. To understand and manage this phenomenon, careful research into its causes is needed. It is necessary to establish what degree of sophistication the underlying technology has reached, which applications exist on the market today for creating extremely realistic fake videos, and whether making them can already be considered within the reach of ordinary users. Finally, it is essential to understand whether there are sufficient technological and legal tools to counter deepfakes and how they can be applied. With this information in hand, we can reflect on what scenarios could arise from the spread of deepfakes in the current global social and political context, and with what consequences. 3

This complex research would help us to understand the malicious exploitation of the advanced artificial intelligence techniques behind deepfakes, which turn false information into potential cyber-physical weapons, among the most devastating, capable of undermining the security of nations. As potential cyber weapons, deepfakes must be carefully monitored and studied in order to identify the elements needed to draw up guidelines for managing them properly, if such management is possible at all.